The text processing pipeline in Sphinx


Picture

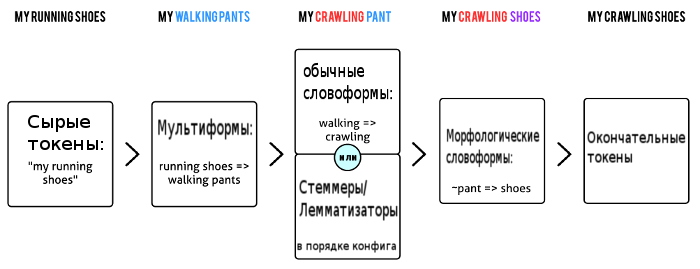

The picture above shows a simplified text processing pipeline (in engine version 2.x).

Looks simple enough, but the devil is in the details. There are several very different filters (which are applied in a specific order); the tokenizer does more than just split the text into words; and finally, the "etc." in the morphology block actually covers at least three different things.

Therefore, a more accurate picture would be the following:


Regular expression filters

This is an optional step. Essentially it is a set of regular expressions that are applied to the documents and queries sent to Sphinx, and nothing more! So it is just syntactic sugar, but a rather convenient one: with regexps Sphinx handles everything itself, whereas without them you would have to write a separate script to load data into Sphinx, then another one to fix up the queries, and keep both scripts in sync. Inside Sphinx, we simply run all the filters over the fields and queries before any further processing. That's all! A more detailed description is in the regexp_filter section of the documentation.
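The idea of running one shared filter list over both documents and queries can be sketched in a few lines of Python. This is only an illustration: the two filters and the names `FILTERS` and `apply_filters` are made up for this example, and Python's `re` module stands in for whatever regexp engine Sphinx uses internally.

```python
import re

# Hypothetical filter list: (pattern, replacement) pairs applied in order.
FILTERS = [
    (re.compile(r'(\d+)"'), r"\1 inch"),                       # 13" -> 13 inch
    (re.compile(r"\bblue\b|\bred\b", re.IGNORECASE), "color"),  # red -> color
]


def apply_filters(text: str) -> str:
    """Run every filter over the text, in the order they are listed."""
    for pattern, replacement in FILTERS:
        text = pattern.sub(replacement, text)
    return text


# The same function is used for indexed fields and for incoming queries,
# so the two can never get out of sync.
document = 'Laptop with a 13" screen, Red case'
query = '13" red'
print(apply_filters(document))
print(apply_filters(query))
```

Because both sides go through the same list, the filtered query matches the filtered document without any separate preprocessing scripts.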


HTML Stripper

This is also an optional step. This handler is only enabled if the html_strip directive is specified in the configuration. It runs immediately after the regular expression filters. The stripper removes all HTML tags from the input text. In addition, it can extract and index individual attributes of these tags (see html_index_attrs) and remove the text between tags (see html_remove_elements). Finally, because zones within documents and paragraphs use the same SGML markup, the stripper also detects zone and paragraph boundaries (see index_sp and index_zones). (Otherwise, you would need yet another identical pass over the document to solve this problem. Inefficient!)
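A rough sketch of what such a stripper does, written with Python's standard `html.parser`. The class name `Stripper`, the helper `strip_html` and the way the options are passed in are assumptions made for this example; they only mimic the roles of html_index_attrs and html_remove_elements. As a simplification, every tag is treated as a word separator here.

```python
from html.parser import HTMLParser


class Stripper(HTMLParser):
    """Toy HTML stripper: drops tags, keeps text and selected attributes."""

    def __init__(self, index_attrs, remove_elements):
        super().__init__(convert_charrefs=True)
        self.index_attrs = index_attrs          # e.g. {"img": {"alt"}}
        self.remove_elements = remove_elements  # e.g. {"style", "script"}
        self.skip_depth = 0                     # > 0 while inside a removed element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.remove_elements:
            self.skip_depth += 1
            return
        if self.skip_depth:
            return
        wanted = self.index_attrs.get(tag, ())
        for name, value in attrs:
            if name in wanted and value:
                self.chunks.append(value)       # attribute text gets indexed too

    def handle_endtag(self, tag):
        if tag in self.remove_elements and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.chunks.append(data)


def strip_html(html, index_attrs, remove_elements):
    parser = Stripper(index_attrs, remove_elements)
    parser.feed(html)
    parser.close()
    # Join the pieces and collapse runs of whitespace.
    return " ".join(" ".join(parser.chunks).split())


page = '<p>Hello <b>world</b><style>p {color: red}</style><img src="a.png" alt="a lamb"></p>'
print(strip_html(page, {"img": {"alt"}}, {"style"}))
```

Everything happens in one pass over the markup, which is the point the paragraph above makes about not scanning the document twice.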


Tokenization

This step is mandatory. Whatever happens, we need to split the phrase "Mary had a little lamb" into individual keywords. That is the essence of tokenization: turning a text field into a set of keywords. What could be easier, it would seem?

All true, except that simply splitting on whitespace and punctuation does not always work, which is why we have a set of parameters that control tokenization.

First, there are tricky characters that are both "characters" and "non-characters"; moreover, a single character can simultaneously be a "character", "whitespace" and "punctuation" (which at first glance could also be treated as whitespace, but in fact cannot). To deal with all these problems there are the settings charset_table, blend_chars, ignore_chars and ngram_chars.

By default, the Sphinx tokenizer treats all unknown characters as whitespace. So whatever crazy Unicode pseudographics you put into your document, it will be indexed as just whitespace. All characters mentioned in charset_table are treated as regular characters. charset_table also lets you map one character to another: usually this is used to fold characters to the same case, to remove diacritics, or both. In most cases this is enough: map the known characters as charset_table says, replace all unknown ones (including punctuation) with spaces, and tokenization is done.
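The basic scheme (known characters are mapped, everything else becomes whitespace) fits into a short Python sketch. The table below is a hand-built stand-in for a charset_table, and the function names are invented for this example.

```python
def build_charset_table():
    """Hypothetical table: Latin letters folded to lowercase, digits, one diacritic."""
    table = {}
    for code in range(ord("a"), ord("z") + 1):
        lower = chr(code)
        table[lower] = lower
        table[lower.upper()] = lower   # case folding: A -> a
    for digit in "0123456789":
        table[digit] = digit
    table["é"] = "e"                   # diacritic removal: é -> e
    table["É"] = "e"
    return table


def basic_tokenize(text, table):
    # Every character missing from the table is replaced with a space.
    mapped = "".join(table.get(ch, " ") for ch in text)
    return mapped.split()


table = build_charset_table()
print(basic_tokenize("Mary had a little lamb!", table))
print(basic_tokenize("Café ★ 24/7", table))
```

Note that punctuation and the star symbol never need to be listed anywhere: simply not being in the table is what turns them into separators.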

However, there are three significant exceptions.

- Sometimes a text editor (e.g. Word) inserts soft hyphen characters directly into the text! And if you don't ignore them completely (instead of just replacing them with spaces), the text will be indexed as "ma ry had a lit tle lamb". To solve this problem, use ignore_chars.

- East Asian languages with ideographic characters. Whitespace is not significant in them! Therefore, for limited support of CJK (Chinese, Japanese, Korean) texts in the core, you can specify ngram_chars, and then each such character will be treated as a separate word, as if it were surrounded by whitespace (even though it actually is not).

- With tricky characters like & or . we really DON'T KNOW during word breaking whether we want to index them or drop them. For example, in the phrase "Jeeves & Wooster" the & sign can be removed. But in AT&T, no way! It would also be wrong to break "Marvel's Agents of S.H.I.E.L.D". For this, Sphinx lets you list characters in the blend_chars directive. Characters in this list are processed in two ways: as regular characters and as whitespace. Note how a simple sequence of ordinary characters can generate many tokens once blend_chars comes into play: for example, the field "Back to the U.S.S.R" will be split into the tokens back to the u s s r, as usual, but in addition another token "u.s.s.r" will be indexed at the same position as the "u" in the basic split.
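The three exceptions above can be put together in one toy tokenizer. This is a sketch under simplifying assumptions, not Sphinx's algorithm: the function name and its parameters are invented for this example, characters are passed as plain strings, and a blended token is simply trimmed of leading and trailing blended characters.

```python
import string


def tokenize(text, blend_chars="", ignore_chars="", ngram_chars="",
             word_chars=string.ascii_lowercase + string.digits):
    """Return (position, token) pairs for a lowercased text."""
    kept = []
    for ch in text.lower():
        if ch in ignore_chars:
            continue                    # dropped entirely, no gap is left behind
        if ch in ngram_chars:
            kept.append(f" {ch} ")      # each such character becomes its own word
        elif ch in word_chars or ch in blend_chars:
            kept.append(ch)
        else:
            kept.append(" ")            # unknown characters act as whitespace

    tokens, pos = [], 0
    for chunk in "".join(kept).split():
        # First reading: blended characters act as whitespace.
        parts = "".join(" " if ch in blend_chars else ch for ch in chunk).split()
        if not parts:
            continue                    # the chunk was only blended characters
        first = pos + 1
        for part in parts:
            pos += 1
            tokens.append((pos, part))
        # Second reading: blended characters are part of the word.
        if len(parts) > 1:
            tokens.append((first, chunk.strip(blend_chars)))
    return tokens


print(tokenize("Ma\u00adry had a lit\u00adtle lamb", ignore_chars="\u00ad"))
print(tokenize("AT&T", blend_chars="&"))
print(tokenize("Jeeves & Wooster", blend_chars="&"))
print(tokenize("Back to the U.S.S.R", blend_chars="."))
```

The extra blended token shares its position with the first of its parts, which is what lets both "at&t" and "at t" match the same place in the document.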

And all of this happens with the most basic elements of text, the characters! Scared?!

In addition, the tokenizer (oddly enough) knows how to handle exceptions (such as C++ or C#, where special characters are meaningful only within these keywords and can be completely ignored in all other cases), and it can also detect sentence boundaries (if the index_sp directive is set). This problem cannot be solved later, because after tokenization we no longer have any special characters or punctuation. It also makes no sense to do it at earlier stages because, again, making 3 passes over the same text to perform 4 operations on it is worse than a single pass that puts everything in its place at once.

Internally, the tokenizer is designed so that exceptions fire before everything else. In this sense they are very similar to regular expression filters (and, moreover, they can be emulated with regular expressions; we say "can", though we never tried: exceptions are actually much simpler and faster to use). Want to add another regexp? OK, this will result in one more pass over the text fields. But all the exceptions are applied in a single tokenizer pass and take 15-20% of tokenization time (which amounts to 2-5% of total indexing time).
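To illustrate "exceptions fire first, in a single pass", here is a small Python sketch. The exception table and the function name are hypothetical, matching is case-insensitive purely for brevity, and the emulation uses a regexp, which is exactly the slower route the text mentions; it only shows the ordering, not how Sphinx implements it.

```python
import re

# Hypothetical exceptions: source text -> token that is emitted as-is.
EXCEPTIONS = {"c++": "cplusplus", "c#": "csharp"}

# One combined pattern, longest keys first, so the text is scanned only once.
_EXC_PATTERN = re.compile(
    "|".join(re.escape(key) for key in sorted(EXCEPTIONS, key=len, reverse=True)),
    re.IGNORECASE,
)


def _plain_tokens(text):
    # Ordinary tokenization: special characters are treated as separators.
    return re.findall(r"[a-z0-9]+", text.lower())


def tokenize_with_exceptions(text):
    tokens, last = [], 0
    for match in _EXC_PATTERN.finditer(text):
        tokens += _plain_tokens(text[last:match.start()])
        tokens.append(EXCEPTIONS[match.group().lower()])  # exception wins
        last = match.end()
    tokens += _plain_tokens(text[last:])
    return tokens


print(tokenize_with_exceptions("I like C++ and C# + more"))
```

Outside the listed keywords the plus and hash signs are still discarded, so they carry meaning only inside the exceptions themselves.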

Sentence boundary detection is hard-coded in the tokenizer, and there is nothing to configure (and no need to). Just turn it on and expect everything to work (it usually does; but who knows, there might be some strange edge cases).

So, if you take a relatively harmless dot, put it first into an exception, then into blend_chars, and also set index_sp=1, you risk stirring up a whole hornet's nest (fortunately, one that does not extend beyond the tokenizer). Again, from the outside everything "just works" (although if you enable ALL of the above options, and then try to index some strange text that triggers all the conditions at once and thereby awakens Cthulhu, that is your own fault!)

From this point on we have tokens! And all the later processing phases deal with individual tokens. Onward!


Wordforms and morphology

Both steps are optional; both are disabled by default. More interestingly, wordforms and morphology processors (stemmers and lemmatizers) are to some extent interchangeable, and therefore we consider them together.

Every word produced by the tokenizer is processed separately. There are several different processors: from the dumb but still somewhat popular Soundex and Metaphone, to classic Porter stemmers, including the libstemmer library, to full dictionary-based lemmatization. All processors generally take one word and replace it with a given normalized form. So far so good.

And now the details: morphology processors are applied in exactly the order given in the config, until the word gets processed. That is, as soon as a word is modified by one of the processors, that's it: the processing sequence ends, and all subsequent processors are not invoked. For example, in the chain morphology = stem_en, stem_fr the English stemmer takes precedence; and in the chain morphology = stem_fr, stem_en the French one does. And in the chain morphology = soundex, stem_en mentioning the English stemmer is essentially useless, since soundex transforms all English words before the stemmer gets to them. An important side effect of this behavior is that if a word is already in normal form, and one of the stemmers detects that (but of course changes nothing), the word will still be processed by the subsequent stemmers.
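The "first processor that changes the word wins" rule is easy to show in Python. The two stemmers below are deliberately crude toys written for this example; they are not the Porter or libstemmer algorithms and exist only to make the ordering visible.

```python
def toy_stem_en(word):
    """Toy 'English' stemmer: strips a couple of common suffixes."""
    for suffix in ("ing", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def toy_stem_fr(word):
    """Toy 'French' stemmer with its own suffix list."""
    for suffix in ("ement", "es"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def apply_chain(word, chain):
    for processor in chain:
        result = processor(word)
        if result != word:
            return result   # the word was modified: stop, skip the rest
    return word             # nobody changed it; every processor had a try


print(apply_chain("tables", [toy_stem_en, toy_stem_fr]))
print(apply_chain("tables", [toy_stem_fr, toy_stem_en]))
print(apply_chain("walk", [toy_stem_en, toy_stem_fr]))
```

The last call shows the side effect described above: "walk" is left alone by the first stemmer, so it is still handed to the second one.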

Next. Regular wordforms are an implicit morphology processor with the highest priority. If wordforms are specified, words are handled by them first, and reach the morphology processors only if no conversion happened. Thus any nasty stemmer or lemmatizer bug can be fixed with wordforms. For example, the English stemmer reduces the words "business" and "busy" to the same stem, "busi". This is easily fixed by adding one line, "business => business", to the wordforms. (And yes, note that wordforms here do even more than morphology: what matters is the very fact of the replacement, even though the word itself has not actually changed.)

We mentioned "regular wordforms" above. Here is why: there are three different types of wordforms.

- Regular wordforms. They map tokens 1:1 and in a way replace morphology (we have just mentioned this).

- Morphological wordforms. You can replace all forms of running with walking using a single line "~run => walk" instead of many rules about "running", "runs", "ran", etc. And while in English there may not be that many such variants, in some other languages, such as Russian, a single stem can have tens or even hundreds of different inflections. Morphological wordforms are applied after the morphology processor. And they still map words 1:1.

- Multiforms. They map words M:N. In general, they work as a plain substitution and are executed at the earliest possible stage. The easiest way to think of multiforms is as a kind of early replacement. In this sense, they are somewhat like regexps or exceptions, but they are applied at a different stage and therefore ignore punctuation. Note that after a multiform is applied, the resulting tokens are subject to all other morphological processing, including regular 1:1 wordforms!

Consider this example:

    morphology = stem_en
    wordforms = myforms.txt

    myforms.txt:
    walking => crawling
    running shoes => walking pants
    ~pant => shoes

Suppose we index the document "my running shoes" with these strange settings. What will be the result in the index?

- First, we get three tokens: "my" "running" "shoes".

- Then the multiform is applied and converts them to "my" "walking" "pants".

- The morphology processor (English stemmer) handles "my" and "pants" (because "walking" has already been processed by a regular wordform) and gives "my" "crawling" "pant".

- Finally, the morphological wordform maps all forms of "pant" to "shoes". The resulting tokens "my" "crawling" "shoes" are what gets stored in the index.
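The four steps above can be replayed with a small Python simulation. Everything here is an illustrative assumption: the stemmer is a toy that merely strips "ing" and "s", the three dictionaries mirror the rules for the example with "my running shoes", and a word changed by a regular wordform is assumed to skip all later stages.

```python
def toy_stem_en(word):
    """Toy stand-in for stem_en: strips 'ing' or a trailing 's'."""
    for suffix in ("ing", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


MULTIFORMS = {("running", "shoes"): ("walking", "pants")}  # M:N, applied first
WORDFORMS = {"walking": "crawling"}                        # regular, 1:1
MORPH_WORDFORMS = {"pant": "shoes"}                        # "~pant => shoes"


def apply_multiforms(tokens):
    out, i = [], 0
    while i < len(tokens):
        for src, dst in MULTIFORMS.items():
            if tuple(tokens[i:i + len(src)]) == src:
                out.extend(dst)
                i += len(src)
                break
        else:
            out.append(tokens[i])   # no multiform starts here
            i += 1
    return out


def normalize(word):
    if word in WORDFORMS:
        return WORDFORMS[word]      # regular wordform wins, morphology is skipped
    stem = toy_stem_en(word)
    return MORPH_WORDFORMS.get(stem, stem)  # morphological wordforms come last


def process(text):
    return [normalize(token) for token in apply_multiforms(text.lower().split())]


print(process("my running shoes"))
```

Changing the order of the stages in `normalize` changes the result, which is why it helps to keep this order in mind when writing a wordforms file.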

Sounds solid. However, how are mere mortals, who do not develop Sphinx and are not used to debugging C++ code, supposed to figure this out? Very simple: there is a special command for that:

    mysql> call keywords('my running shoes', 'test1');
    +------+---------------+------------+
    | qpos | tokenized     | normalized |
    +------+---------------+------------+
    | 1    | my            | my         |
    | 2    | running shoes | crawling   |
    | 3    | running shoes | shoes      |
    +------+---------------+------------+
    3 rows in set (0.00 sec)

And to conclude this section, here is an illustration of how morphology and the three different kinds of wordforms work together:


Words and positions

After all this processing, the tokens have certain positions. Usually they are simply numbered sequentially, starting from one. However, each position in the document may belong to multiple tokens! This usually happens when one raw token generates multiple versions of the final word, whether through blended characters, lemmatization, or in several other ways.


The magic of blended characters

For example, "AT&T" with "&" as a blended character will be split into "at" at position 1, "t" at position 2, and also "at&t" at position 1.


Lemmatization

This one is more interesting. Take, for example, the document "White dove flew away. I dove into the pool." The first occurrence of the word "dove" is a noun. The second is the verb "dive" in the past tense. But when examining these words as separate tokens, we cannot tell which is which (and even when looking at several tokens at once, making the right decision is quite hard). In this case, morphology = lemmatize_en_all makes Sphinx index all the variants. In this example, positions 2 and 6 each get two different tokens, so both "dove" and "dive" are stored.

Positions affect phrase and proximity searches; they also affect ranking. As a result, any of the four queries "white dove", "white dive", "dove into" and "dive into" will match the document as a phrase.
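A position that holds several tokens can be modeled as a mapping from position to a set of words. The Python sketch below uses a tiny hand-written lemma table (an assumption for this example, not real lemmatizer output) and matches query words literally, without lemmatizing the query.

```python
# Hypothetical lemma table: surface form -> all possible lemmas.
LEMMAS = {"dove": ("dove", "dive")}


def index_positions(text):
    """Map each 1-based position to the set of tokens stored there."""
    words = text.lower().replace(".", " ").split()
    return {pos: set(LEMMAS.get(word, (word,))) for pos, word in enumerate(words, 1)}


def phrase_match(index, phrase):
    """True if the phrase terms occupy consecutive positions in the index."""
    terms = phrase.lower().split()
    return any(
        all(term in index.get(start + offset, ()) for offset, term in enumerate(terms))
        for start in index
    )


index = index_positions("White dove flew away. I dove into the pool.")
print(sorted(index[2]), sorted(index[6]))
for query in ("white dove", "white dive", "dove into", "dive into", "white pool"):
    print(query, phrase_match(index, query))
```

Since both lemmas sit at the same position, a phrase query lines up with its neighbours regardless of which variant it uses.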


Stopwords

The stopword removal step is very simple: we just throw them out of the text. However, a few things still need to be kept in mind:

1. How to ignore stopwords completely (instead of just replacing them with spaces). Even though stopwords are discarded, the positions of the other words remain unchanged. This means that "microsoft office" and "microsoft in the office", with "in" and "the" ignored as stopwords, will produce different indexes. In the first document, the word "office" is at position 2. In the second, it is at position 4. If you want to remove stopwords completely, you can use the stopword_step directive and set it to 0. This will affect phrase searches and ranking.

2. How to add stopwords: as individual forms or as full lemmas. This setting is called stopwords_unstemmed and determines whether stopwords are removed before or after morphology.
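The effect of stopword_step on positions from the first point can be reproduced with a few lines of Python. The function name and its parameters are made up for this sketch; only the position arithmetic follows the description above.

```python
def index_with_stopwords(text, stopwords, stopword_step=1):
    """Return (position, word) pairs, skipping stopwords."""
    pos, indexed = 0, []
    for word in text.lower().split():
        if word in stopwords:
            pos += stopword_step   # 1 keeps a gap, 0 closes it
            continue
        pos += 1
        indexed.append((pos, word))
    return indexed


stopwords = {"in", "the"}
print(index_with_stopwords("microsoft office", stopwords))
print(index_with_stopwords("microsoft in the office", stopwords))
print(index_with_stopwords("microsoft in the office", stopwords, stopword_step=0))
```

With a step of 0 the second document becomes indistinguishable from the first, so the phrase query "microsoft office" would match both.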


What now?

Well, we have covered almost all the typical tasks of everyday text processing. By now it should be clear what happens inside, how it all works together, and how to configure Sphinx to achieve the desired result. Cheers!

But there is more. Let us briefly mention that there is also the index_exact_words option, which tells Sphinx to index the original token (before morphology is applied) in addition to the morphologically processed one. There is also the bigram_index option, which makes Sphinx index word pairs ("a brown fox" yields the tokens "a brown", "brown fox") and then use them for super fast phrase searches. You can also use indexing and query plugins, which let you implement almost any token processing you want.
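What "indexing word pairs" means can be shown in a couple of lines of Python. This only illustrates the pairing itself; how Sphinx stores or selects bigrams is not covered by this sketch, and the function name is invented.

```python
def bigrams(tokens):
    """Pair every token with its right-hand neighbour."""
    return [f"{left} {right}" for left, right in zip(tokens, tokens[1:])]


print(bigrams("a brown fox".split()))
```

A two-word phrase query can then be answered by looking up a single pair token instead of intersecting the position lists of both words.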

Finally, for the upcoming Sphinx 3.0 release there are plans to unify all these settings: instead of general directives that apply to the whole document, it would be possible to build individual filter chains for processing individual fields. So you could, for example, first remove some stopwords, then apply wordforms, then morphology, then another wordforms filter, etc.
