A tool for monitoring robot behavior on your website

Welcome!

Today I'd like to tell you about my project, which was launched in 2008. Much has changed since then, both in the data storage architecture and in the information processing algorithms.

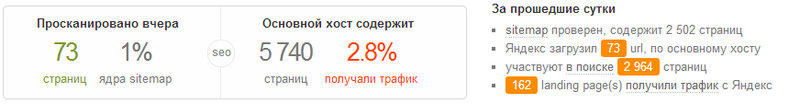

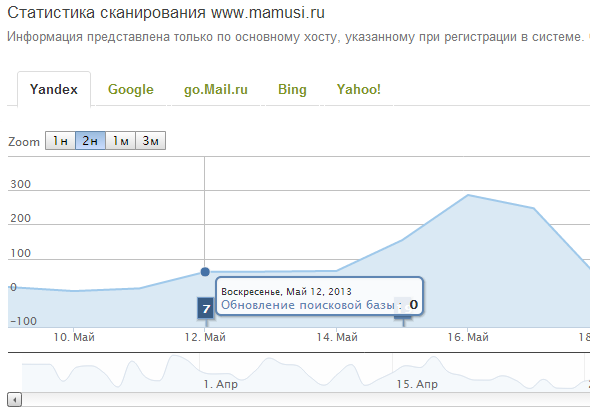

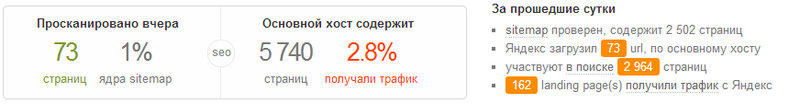

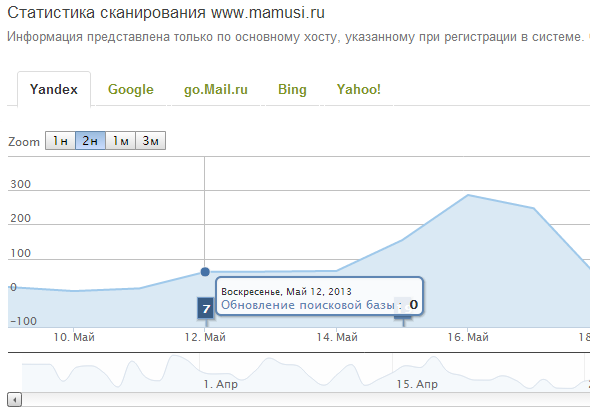

This post is about a service for SEO professionals and ordinary webmasters. BotHunter is a system for passive, real-time monitoring of user agents on your website. You can see examples of the interface in the screenshots in this post or in the DEMO account on the website (functionality is limited in demo mode).

Article based on information from habrahabr.ru

Backstory

Given my own appetite and the amount of data I analyze, I wrote this service for myself. For me, a "graphical answer" to every question is easier to understand. Frequent questions that BotHunter answers:

- Have search engines visited my site, when, and how often?
- Who has been scraping my site, and when, while posing as a search bot?
- How many pages of my site has the search robot loaded?
- How many of the pages visited by the search crawler appear in search?
- How many of the pages that appear in search bring traffic?
- How many pages from Sitemap.XML were indexed?
- The search bot visits my site constantly, but is that enough?
- Can I see data for Yandex, Google, Поиск.Mail.ru, Bing, and Yahoo! in one system (for a large list of my websites)?
- Are there pages on the site that could be "bad" for SEO?
- What share of landing pages is visited by the search bot but has never been an entry point from search engines?
- etc., etc.
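To give a rough idea of what answering the first few questions involves, here is a minimal sketch that counts crawler hits per day and collects the pages each engine has loaded. It is not BotHunter's code. It assumes the server writes the Combined Log Format, and the User-Agent markers in `BOT_MARKERS` are an illustrative, incomplete list.

```python
import re
from collections import Counter, defaultdict

# Assumption: Combined Log Format; adjust the pattern to your server's format.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\S+) '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

# Illustrative User-Agent substrings; the real list is longer.
BOT_MARKERS = {
    "Googlebot": "Google",
    "YandexBot": "Yandex",
    "bingbot": "Bing",
    "Mail.RU_Bot": "Mail.ru",
    "Yahoo! Slurp": "Yahoo!",
}


def engine_of(agent):
    """Return the search engine name for a User-Agent string, or None."""
    for marker, engine in BOT_MARKERS.items():
        if marker in agent:
            return engine
    return None


def crawl_stats(lines):
    """Return (hits per (engine, day), set of URLs loaded per engine)."""
    hits = Counter()
    pages = defaultdict(set)
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that do not fit the assumed format
        engine = engine_of(match["agent"])
        if engine is None:
            continue  # not a known search bot
        hits[(engine, match["day"])] += 1
        pages[engine].add(match["url"])
    return hits, pages
```

Note that the User-Agent header alone proves nothing, since anyone can send it. That is exactly why the "posing as a search bot" question needs a separate check.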

A ready-made wheel already exists

Let me head off those who are about to ask, "Why? There are already Yandex.Webmaster and Google Webmasters."

Yes, these services are useful and well known, but they do not answer the following questions:

1. Are there pages on my site that bots know about but that are not in Sitemap.XML?
2. Are there pages on my site that a bot visits but that have never received traffic (I want the list)?
3. What proportion of URLs is constantly visited by crawlers but does not appear in search?
4. Are there pages on my site with the same size in bytes (also relevant to duplicates)?
5. After a search database update (or an algorithm change) on a given date, how many pages are no longer visited by bots? And how many of them are no longer entry points for organic traffic?
6. etc.

The list of interesting questions could go on, and each of us will have our own...
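Questions 1 and 4 come down to simple set operations once the crawl data is available. The sketch below is only an illustration, not how BotHunter does it. It assumes you already have the paths that bots requested and the response sizes, and it compares URLs by path only, ignoring host and query string. The function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from urllib.parse import urlsplit

# Namespace defined by the Sitemap protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_paths(sitemap_xml):
    """Return the set of URL paths listed in a sitemap.xml document."""
    root = ET.fromstring(sitemap_xml)
    return {
        urlsplit(loc.text.strip()).path
        for loc in root.iter(SITEMAP_NS + "loc")
        if loc.text
    }


def crawled_but_not_in_sitemap(crawled_paths, sitemap_xml):
    """Question 1: paths that bots requested but the sitemap does not list."""
    return set(crawled_paths) - sitemap_paths(sitemap_xml)


def same_size_groups(hits):
    """Question 4: group distinct paths that share a response size.

    hits is an iterable of (path, size_in_bytes) pairs.
    """
    by_size = defaultdict(set)
    for path, size in hits:
        by_size[size].add(path)
    return {size: sorted(paths) for size, paths in by_size.items() if len(paths) > 1}
```

Equal size is only a hint that two pages may be duplicates, so the groups returned by `same_size_groups` are candidates for a manual look rather than a verdict.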

What are the benefits of the service

- event notifications (based on a list of criteria)
- no limit on the number of sites in one account
- no need to look for a needle in a haystack of logs: the system informs you about events
- data from multiple search engines is analyzed and presented in a single interface
- you can analyze not only the whole site but also a slice or segment of it (based on regular expressions applied to URLs)
- all data is stored in our cloud, and history is available from the moment the site was registered in the system
- reports help you prevent near-duplicates
- if your site is regularly scraped, we will tell you who is doing it, so you don't need to keep grepping logs
- the service is free
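One common way to tell a real search bot from a scraper that borrows its User-Agent is a reverse DNS lookup followed by a forward lookup. The search engines themselves document this method. The sketch below illustrates that general technique and is not BotHunter's implementation. The domain suffixes in `CRAWLER_DOMAINS` are examples, so check each engine's documentation before relying on them. The `reverse` and `forward` parameters exist only so the function can be tested without network access.

```python
import socket

# Illustrative reverse-DNS suffixes; verify against each engine's documentation.
CRAWLER_DOMAINS = {
    "Google": (".googlebot.com", ".google.com"),
    "Yandex": (".yandex.ru", ".yandex.net", ".yandex.com"),
    "Bing": (".search.msn.com",),
}


def is_genuine_bot(ip, engine,
                   reverse=socket.gethostbyaddr,
                   forward=socket.gethostbyname_ex):
    """Return True if ip resolves to the engine's domain and back to itself."""
    try:
        host = reverse(ip)[0]  # step 1: reverse lookup, IP to host name
    except OSError:
        return False  # no PTR record or lookup failure
    if not host.endswith(CRAWLER_DOMAINS[engine]):
        return False  # the host name is outside the engine's domains
    try:
        return ip in forward(host)[2]  # step 2: the name must map back to the IP
    except OSError:
        return False
```

A visitor that claims to be Googlebot but fails this check is a candidate for the "who is scraping my site" report.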

In addition to simple, clear reports, BotHunter checks the integrity of the robots.txt and sitemap.xml files of every website daily. sitemap.xml is a separate story: the file is checked for validity and for conformance with the Sitemap protocol. The system keeps a daily log of all checks and of every report generated.
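For a sense of what a conformance check can look like, here is a minimal sketch based on a few rules from the Sitemap protocol: the `urlset` root element in the protocol namespace, a required `loc` shorter than 2,048 characters, at most 50,000 URLs per file, and the allowed `changefreq` and `priority` values. It is an assumption about the kind of check involved, not BotHunter's actual validator, and it does not handle sitemap index files.

```python
import xml.etree.ElementTree as ET

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
CHANGEFREQ = {"always", "hourly", "daily", "weekly", "monthly", "yearly", "never"}
MAX_URLS = 50_000
MAX_LOC_LENGTH = 2_048  # loc must be shorter than this


def check_sitemap(sitemap_xml):
    """Return a list of problems; an empty list means these checks passed."""
    try:
        root = ET.fromstring(sitemap_xml)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]
    if root.tag != NS + "urlset":
        return [f"unexpected root element: {root.tag}"]

    problems = []
    urls = root.findall(NS + "url")
    if len(urls) > MAX_URLS:
        problems.append(f"too many URLs: {len(urls)}")
    for i, url in enumerate(urls, start=1):
        loc = (url.findtext(NS + "loc") or "").strip()
        if not loc:
            problems.append(f"url #{i}: missing <loc>")
        elif len(loc) >= MAX_LOC_LENGTH:
            problems.append(f"url #{i}: <loc> is too long")
        freq = url.findtext(NS + "changefreq")
        if freq is not None and freq.strip() not in CHANGEFREQ:
            problems.append(f"url #{i}: invalid <changefreq>")
        priority = url.findtext(NS + "priority")
        if priority is not None:
            try:
                in_range = 0.0 <= float(priority) <= 1.0
            except ValueError:
                in_range = False
            if not in_range:
                problems.append(f"url #{i}: invalid <priority>")
    return problems
```

Returning a list of problems instead of raising on the first one fits a daily check log, where each run records everything it found.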

What's next

- detection of deviations from the statistical norms of indexing (for your site)
- recommendations for indexing settings (taking the characteristics of each site into account)
- collection of the key phrases that brought traffic to the site
- an extension of that collection: a list of key phrases recommended for the site
- integration with Google Analytics to help predict traffic loss (by segment)
- whatever else is useful to professionals (your suggestions and ideas)

P.S. Briefly, on the technical characteristics:

- we use our own group of servers in the Filanco data center
- all data is stored and analyzed in NoSQL, specifically MongoDB
- your log files are not stored, only the results of processing them
- to log in, use your Facebook, Google, or Yandex profile

The main purpose of this post is to get your advice.

What data would you like to receive, and in what form?

What ideas would you suggest?

Thank you in advance for constructive criticism...
